Scientists Crack the Code: Brain Device Successfully Reads Inner Speech for the First Time

Imagine typing a text message or controlling a computer simply by thinking the words: no speaking, no hand movements, just thought. That science fiction scenario has moved closer to reality now that researchers have unveiled the first brain-computer interface capable of accurately decoding inner speech, the silent mental conversations we have with ourselves countless times each day.

Breaking the Neural Code

A team of neuroscientists at New York University has achieved what many considered impossible: translating the brain's electrical patterns into recognizable words as people silently speak to themselves. Their study, published in Nature Neuroscience, shows that electrodes placed in the brain can capture and decode the faint neural signals generated during inner speech, with accuracy well beyond earlier attempts.

The implications are staggering. For the millions of people worldwide who have lost the ability to speak because of stroke, ALS, or other neurological conditions, this technology offers the tantalizing possibility of communicating their thoughts directly to the world.

How Silent Thoughts Become Digital Words

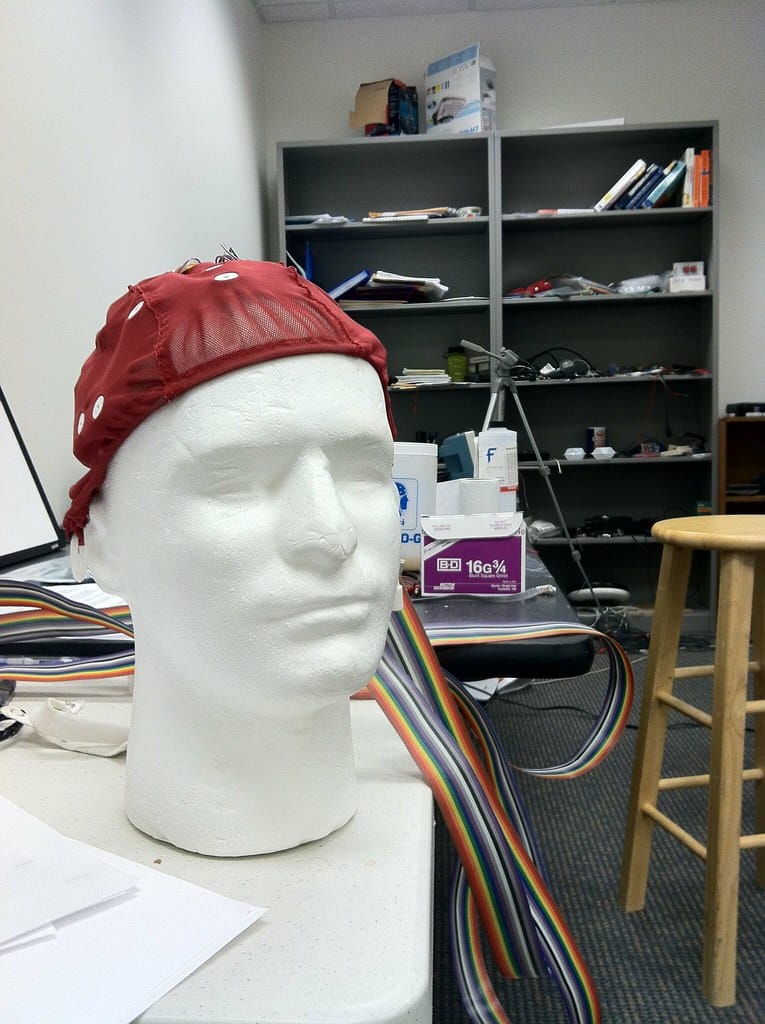

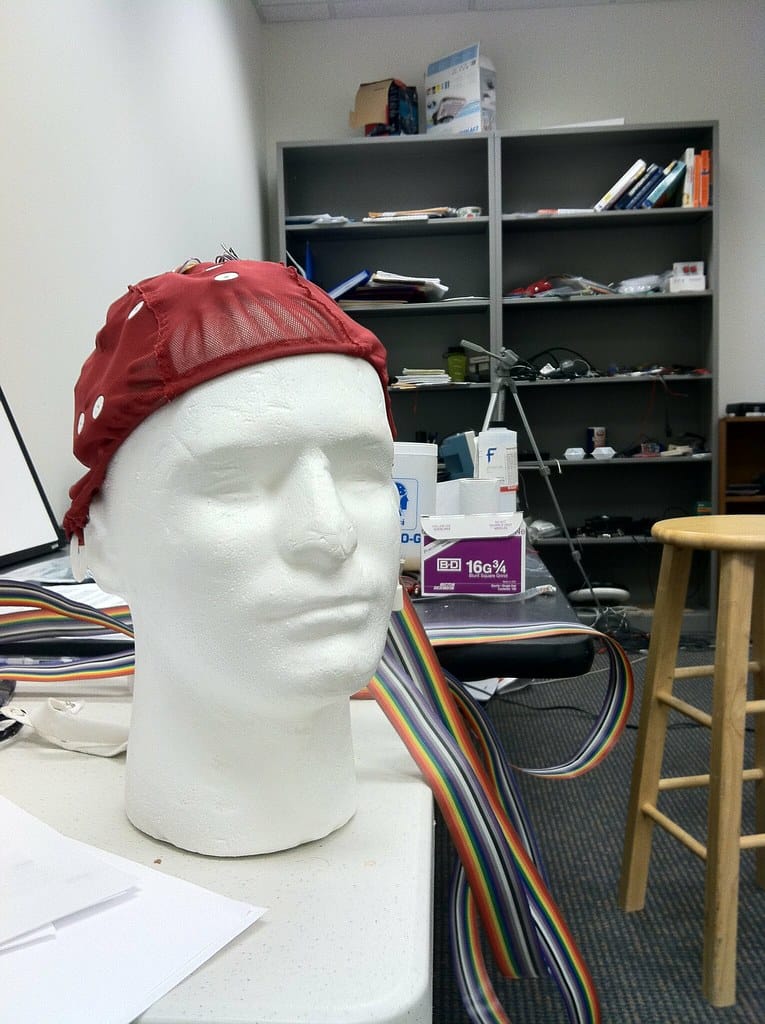

The research team worked with epilepsy patients who already had electrodes implanted in their brains for medical monitoring. These participants were asked to silently recite words and phrases while the implanted electrodes recorded their neural activity. Using machine learning algorithms, the researchers then identified distinct patterns of brain activity associated with different words and sounds.

"What we've discovered is that when you think words to yourself, your brain activates many of the same regions involved in actually speaking those words out loud," explains lead researcher Dr. Sarah Chen. "The signals are there – we just needed to learn how to read them."

The system decoded individual words with 79% accuracy, and performed better still on common phrases and sentences. That may sound modest, but it is nearly double the 40% accuracy that earlier attempts struggled to reach.
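The article does not describe the team's actual decoding pipeline, so the sketch below is only a toy illustration of the general idea: learn an average activity pattern for each word, then label a new recording with the closest pattern and score the result on held-out trials. It uses a simple nearest-centroid classifier (a stand-in chosen for clarity, not the researchers' method) on entirely synthetic data. The function names `make_trials`, `fit_centroids`, `decode`, and `accuracy`, the electrode count, the noise level, and the five-word vocabulary are all hypothetical.

```python
import random


def make_trials(words, n_electrodes, trials_per_word, noise, seed=0):
    """Simulate recordings (hypothetical): each word gets a hidden 'template'
    pattern across electrodes, and every trial is that template plus Gaussian
    noise. Entirely synthetic; real neural recordings are far messier."""
    rng = random.Random(seed)
    templates = {w: [rng.gauss(0.0, 1.0) for _ in range(n_electrodes)] for w in words}
    trials = []
    for w in words:
        for _ in range(trials_per_word):
            trials.append(([t + rng.gauss(0.0, noise) for t in templates[w]], w))
    rng.shuffle(trials)
    return trials


def fit_centroids(train):
    """'Training': average all feature vectors recorded for each word."""
    sums, counts = {}, {}
    for x, w in train:
        if w not in sums:
            sums[w] = [0.0] * len(x)
            counts[w] = 0
        sums[w] = [s + v for s, v in zip(sums[w], x)]
        counts[w] += 1
    return {w: [s / counts[w] for s in sums[w]] for w in sums}


def decode(centroids, x):
    """Pick the word whose average pattern is closest (squared Euclidean distance)."""
    return min(centroids, key=lambda w: sum((c - v) ** 2 for c, v in zip(centroids[w], x)))


def accuracy(centroids, test):
    """Fraction of held-out trials decoded as the word actually imagined."""
    return sum(decode(centroids, x) == w for x, w in test) / len(test)


if __name__ == "__main__":
    words = ["yes", "no", "water", "help", "pain"]  # hypothetical tiny vocabulary
    trials = make_trials(words, n_electrodes=32, trials_per_word=40, noise=2.0)
    split = int(0.8 * len(trials))  # hold out 20% of trials for testing
    centroids = fit_centroids(trials[:split])
    acc = accuracy(centroids, trials[split:])
    print(f"word accuracy: {acc:.0%} (chance: {1 / len(words):.0%})")
```

Raising `noise` or adding more words to the vocabulary tends to lower the score in this toy setup, which is one reason accuracy figures are easiest to compare when the vocabulary and test conditions match.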

Beyond Science Fiction: Real-World Applications

Medical Breakthroughs on the Horizon

The most immediate beneficiaries of this technology could be patients with locked-in syndrome, who remain mentally alert but cannot move or speak because of neurological damage. Currently, these patients often rely on painstaking methods, such as using eye movements to spell out words one letter at a time.

Clinical trials are already being planned to test the device with ALS patients, who gradually lose their ability to speak as the disease progresses. The technology could allow them to maintain natural communication even as their physical capabilities deteriorate.

The Future of Human-Computer Interaction

Beyond medical applications, inner speech recognition could revolutionize how we interact with technology. Imagine composing emails during your commute, controlling smart home devices without voice commands, or operating machinery in noisy environments – all through thought alone.

Tech giants including Meta and Google have invested heavily in brain-computer interface research, recognizing the potential to create entirely new categories of products and services.

Navigating Ethical Territory

This breakthrough raises profound questions about privacy and mental autonomy. If devices can read our inner speech, what happens to the sanctuary of our private thoughts? Researchers emphasize that the current technology produces readable signals only when a person makes a deliberate, conscious effort, so random passing thoughts remain private.

"The system only works when someone intentionally tries to communicate," clarifies Dr. Chen. "It cannot access spontaneous thoughts or memories without the person's active participation."

Nevertheless, ethicists are calling for robust safeguards and regulations before the technology becomes widely available. Questions about data security, consent, and potential misuse must be addressed as the field advances.

The Road Ahead

While this first-generation device requires surgical implantation, researchers are already working on less invasive alternatives. Some teams are exploring external devices that could detect neural signals through the skull, though these face significant technical challenges, chiefly because the skull and scalp weaken and blur the signals before they reach the sensors.

The current system's vocabulary remains limited to several hundred words, but researchers expect rapid improvements as machine learning algorithms become more sophisticated and training datasets expand.

A New Chapter in Human Communication

The successful decoding of inner speech marks a pivotal moment in neuroscience and human-computer interaction. While significant challenges remain – from improving accuracy to addressing ethical concerns – we're witnessing the birth of a technology that could fundamentally change how humans communicate and interact with the digital world.

For millions of people living with communication disabilities, this research offers genuine hope. And for the rest of us, it provides a glimpse into a future where the boundary between thought and action continues to blur, opening possibilities we're only beginning to imagine.